diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000..38dc1a3

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2025 Contré Yavin

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

\ No newline at end of file

diff --git a/README.md b/README.md

index 4ae8bf2..fd9732d 100644

--- a/README.md

+++ b/README.md

@@ -1,2 +1,698 @@

-# RT_GPU

-My own implementation of a raytracer engine using GPU in OpenGL

+## 1. Introduction

+

+### 🎯 Project Description & Purpose

+RT is a GPU‑accelerated raytracing engine built as a school project to explore real‑time rendering techniques using OpenGL and compute shaders. By dispatching a full‑screen triangle and leveraging GLSL compute shaders, RT shoots rays per pixel directly on the GPU, enabling interactive exploration of advanced lighting, materials, and even non‑Euclidean geometries.
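
The "full‑screen triangle" trick can be sketched in C++ as follows. This is an assumption about the usual setup, not code from the repository. A single oversized triangle with clip‑space corners (-1,-1), (3,-1) and (-1,3) covers the whole viewport after clipping, so no vertex buffer is needed and there is no diagonal seam. The helper name `fullscreenVertex` is hypothetical. In GLSL the same bit trick is normally applied to `gl_VertexID`.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper: clip-space position and UV for vertex 0, 1 or 2 of a
// full-screen triangle, mirroring the usual gl_VertexID bit trick in GLSL.
struct FullscreenVertex {
    std::array<float, 2> clip; // clip-space x, y (z = 0, w = 1 implied)
    std::array<float, 2> uv;   // ranges over [0, 2]; [0, 1] inside the viewport
};

FullscreenVertex fullscreenVertex(int vertexId) {
    assert(vertexId >= 0 && vertexId < 3);
    // Vertex 0 -> (0, 0), vertex 1 -> (2, 0), vertex 2 -> (0, 2) in UV space.
    const float u = static_cast<float>((vertexId << 1) & 2);
    const float v = static_cast<float>(vertexId & 2);
    // Map UV [0, 2] to clip space [-1, 3]; everything outside [-1, 1] is clipped away.
    return {{u * 2.0f - 1.0f, v * 2.0f - 1.0f}, {u, v}};
}
```

On the OpenGL side, this is typically drawn with `glDrawArrays(GL_TRIANGLES, 0, 3)` while an empty VAO is bound, because the core profile still requires one.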

+

+> 🚀 **Purpose:**

+> - Demonstrate end‑to‑end GPU ray generation and shading

+> - Experiment with custom denoising and video output pipelines

+> - Provide a modular framework for adding new primitives, materials, and effects

+

+### ✨ Key Features at a Glance

+- **Full‑screen Triangle Dispatch**: For efficient compute shader ray launches

+- **Custom Ray Generation**: From camera parameters (FOV, aspect ratio, lens)

+- **Material System**: Diffuse, reflective, glossy, transparent & emissive materials

+- **Volumetric Lighting**: Fully customizable volumetric fog

+- **Non‑Euclidean Portals**: Seamless teleportation through linked portals for non‑Euclidean geometry

+- **High‑Performance Traversal**: SAH‑BVH for scenes with tens of millions of triangles

+- **Custom Denoising**: Modified wavelet A-Trous algorithm

+- **FFmpeg‑based Path Renderer**: Exports video along a user‑defined camera path through the scene

+- **Mass Clustering**: Parallelizes rendering across multiple GPUs over the network
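
A minimal pinhole‑camera sketch shows how FOV and aspect ratio (the **Custom Ray Generation** item) turn a pixel into a primary ray direction. The `Camera` struct, its orthonormal basis and the vertical‑FOV convention are assumptions made for illustration. The lens (depth‑of‑field) parameter is not modelled here.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

Vec3 normalize(Vec3 v) {
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return v * (1.0f / len);
}

// Hypothetical pinhole camera: an orthonormal basis and a vertical field of view.
struct Camera {
    Vec3 forward, right, up;
    float verticalFovDeg;
};

// Primary ray direction through the centre of pixel (px, py); (0, 0) is top-left.
Vec3 primaryRayDir(const Camera& cam, int px, int py, int width, int height) {
    assert(width > 0 && height > 0);
    constexpr float kPi = 3.14159265358979f;
    const float aspect = static_cast<float>(width) / static_cast<float>(height);
    const float tanHalf = std::tan(cam.verticalFovDeg * 0.5f * kPi / 180.0f);
    // Pixel centre -> normalised device coordinates in [-1, 1], y pointing up.
    const float ndcX = 2.0f * (static_cast<float>(px) + 0.5f) / static_cast<float>(width) - 1.0f;
    const float ndcY = 1.0f - 2.0f * (static_cast<float>(py) + 0.5f) / static_cast<float>(height);
    // Scale by FOV and aspect ratio, then express the offset in the camera basis.
    const Vec3 dir = cam.forward + cam.right * (ndcX * aspect * tanHalf) + cam.up * (ndcY * tanHalf);
    return normalize(dir);
}
```

Each compute‑shader invocation would evaluate this once for its own pixel. A common way to get anti‑aliasing when accumulating samples is to replace the fixed `+ 0.5f` with a random sub‑pixel offset.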

+

+### 🛠️ Technologies Used

+| Component | Description |

+| ------------------- | ---------------------------------------- |

+| **OpenGL** | Context creation, buffer management |

+| **GLSL Compute Shader** | Ray generation, acceleration structure traversal |

+| **C++20** | Core engine logic and data structures |

+| **FFmpeg** | Video encoding for the networked camera‑path renderer |

+

+### 📸 Screenshots & Rendered Examples

+

+Real‑time raytraced Sponza interior with global illumination.

+

+Portal‑based non‑Euclidean scene demonstration.
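
One way to handle a linked portal pair is to re‑express a ray that hits one portal in the local frame of its twin. The sketch below shows how such a mapping can work, but it is an assumption and not RT's actual code. `Portal`, `Ray` and `teleport` are hypothetical names. Local coordinates are rotated 180° about the up axis, so a ray entering one portal's front face exits through the other portal's front face.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical portal: centre plus an orthonormal frame whose `normal`
// points out of the portal's visible (front) face.
struct Portal {
    Vec3 origin, right, up, normal;
};

struct Ray {
    Vec3 origin, dir;
};

// Maps a ray that hit portal `from` (ray.origin is the hit point) into the frame of
// the linked portal `to`. Local x and z are negated, a 180-degree turn about `up`,
// so a ray entering `from` through its front face leaves `to` through its front face.
Ray teleport(const Ray& ray, const Portal& from, const Portal& to) {
    const Vec3 rel = ray.origin - from.origin;
    const float px = dot(rel, from.right), py = dot(rel, from.up), pz = dot(rel, from.normal);
    assert(std::fabs(pz) < 1e-3f); // the hit point should lie on the entry portal's plane
    const float dx = dot(ray.dir, from.right), dy = dot(ray.dir, from.up),
                dz = dot(ray.dir, from.normal);
    const Vec3 origin = to.origin + to.right * (-px) + to.up * py + to.normal * (-pz);
    const Vec3 dir = to.right * (-dx) + to.up * dy + to.normal * (-dz);
    return {origin, dir};
}
```

After teleporting, traversal continues from the new origin. The origin is usually nudged along the new direction by a small epsilon so the ray does not immediately re‑hit the exit portal.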

+

+A simple emissive sphere "lightbulb" illuminating its surroundings.

+

+Visual explanation from Noah Pitts

+

+SAH BVH partitioning from Jacco Bikker’s article

+

+

+

+

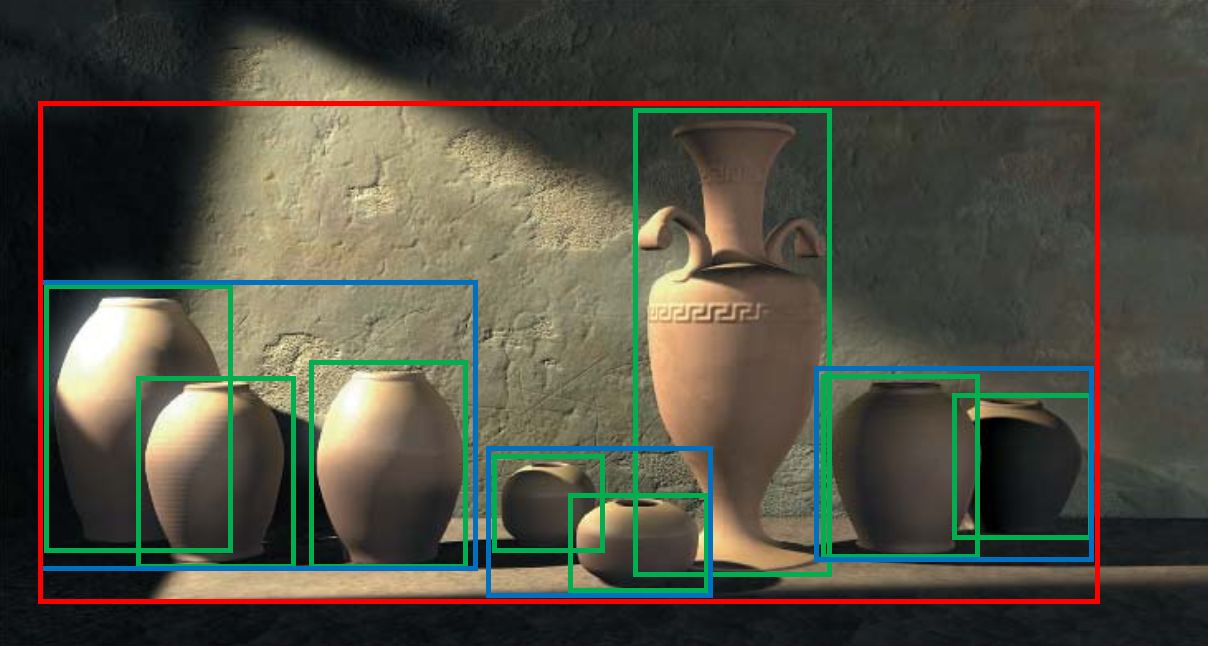

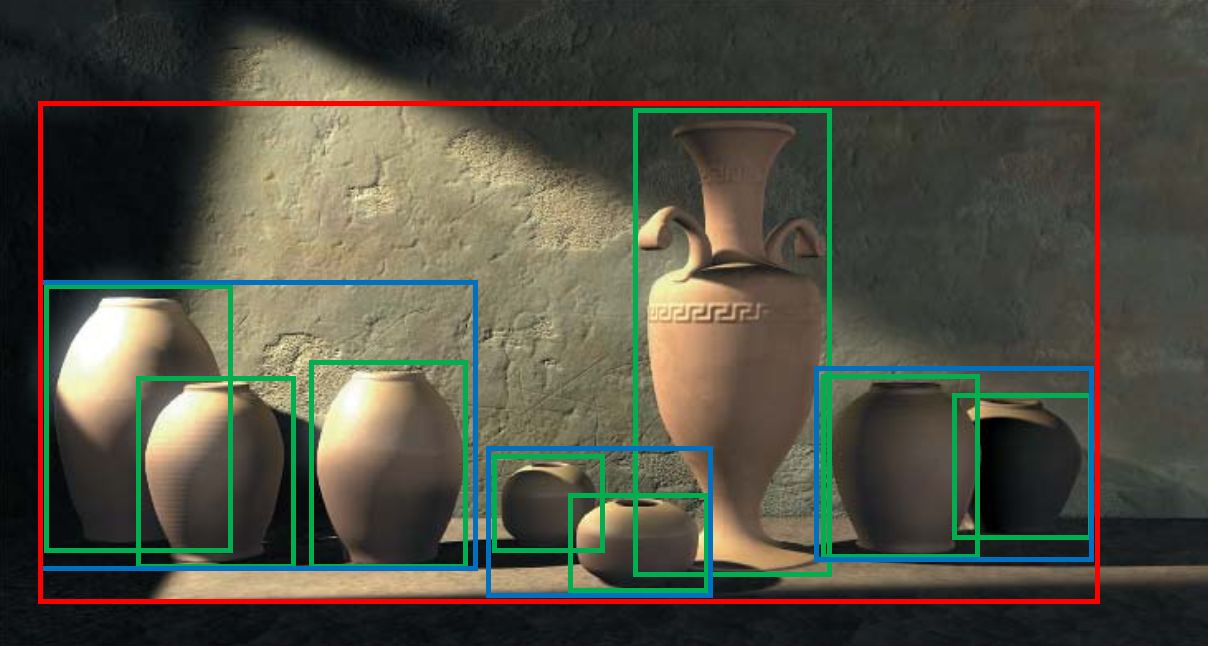

SAH BVH partitioning debug view from our RT
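
The SAH (surface area heuristic) estimates the cost of a split by weighting each child's primitive count by its bounding‑box surface area. The sketch below uses the simplified cost `countL·areaL + countR·areaR` popularized by Jacco Bikker's BVH articles, with constant traversal and intersection costs dropped. The types and the brute‑force candidate search are illustrative assumptions, not RT's builder.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <limits>
#include <vector>

constexpr float kInf = std::numeric_limits<float>::infinity();

// Axis-aligned bounding box; a default-constructed box is empty.
struct AABB {
    std::array<float, 3> lo{kInf, kInf, kInf};
    std::array<float, 3> hi{-kInf, -kInf, -kInf};

    bool empty() const { return lo[0] > hi[0]; }

    void grow(const AABB& b) {
        for (int i = 0; i < 3; ++i) {
            lo[i] = std::min(lo[i], b.lo[i]);
            hi[i] = std::max(hi[i], b.hi[i]);
        }
    }

    // Half the surface area; the constant factor 2 cancels when comparing splits.
    float halfArea() const {
        if (empty()) return 0.0f;
        const float ex = hi[0] - lo[0], ey = hi[1] - lo[1], ez = hi[2] - lo[2];
        return ex * ey + ey * ez + ez * ex;
    }
};

AABB box(float x0, float y0, float z0, float x1, float y1, float z1) {
    return AABB{{x0, y0, z0}, {x1, y1, z1}};
}

struct Primitive {
    AABB bounds;
    std::array<float, 3> centroid;
};

Primitive makePrimitive(const AABB& b) {
    return {b, {(b.lo[0] + b.hi[0]) * 0.5f, (b.lo[1] + b.hi[1]) * 0.5f,
                (b.lo[2] + b.hi[2]) * 0.5f}};
}

// SAH cost of splitting along `axis` at `pos` (primitives classified by centroid):
// count(left) * area(left) + count(right) * area(right).
float evaluateSAH(const std::vector<Primitive>& prims, int axis, float pos) {
    assert(axis >= 0 && axis < 3);
    AABB left, right;
    int leftCount = 0, rightCount = 0;
    for (const Primitive& p : prims) {
        if (p.centroid[axis] < pos) {
            left.grow(p.bounds);
            ++leftCount;
        } else {
            right.grow(p.bounds);
            ++rightCount;
        }
    }
    if (leftCount == 0 || rightCount == 0) return kInf; // split separates nothing
    return leftCount * left.halfArea() + rightCount * right.halfArea();
}

struct Split {
    int axis = -1;
    float pos = 0.0f;
    float cost = kInf;
};

// Brute force: every primitive centroid on every axis is a candidate plane (O(n^2)).
Split findBestSplit(const std::vector<Primitive>& prims) {
    Split best;
    for (int axis = 0; axis < 3; ++axis) {
        for (const Primitive& candidate : prims) {
            const float pos = candidate.centroid[axis];
            const float cost = evaluateSAH(prims, axis, pos);
            if (cost < best.cost) best = {axis, pos, cost};
        }
    }
    return best;
}
```

A node is only worth splitting when the best cost beats the leaf cost, `prims.size() * parentBounds.halfArea()`. Builders for large scenes usually replace the O(n²) search with binned SAH.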

+

+Render of a heavy scene with millions of triangles

+

+A-Trous filter denoising from Joost van Dongen’s paper

+

+#### 🧠 Key Idea

+

+The filter applies **iterative blurring with edge-aware weights**, controlled by color and normal similarity.

+Each iteration doubles the sampling step (à trous = “with holes”).

+This removes high-frequency noise while preserving edges and structure.
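
A single à‑trous pass can be sketched as follows. This is a generic edge‑avoiding version written for illustration, not RT's shader. It filters a grayscale buffer with the 5×5 B3‑spline kernel and spaces the taps `2^iteration` pixels apart. Each tap is scaled by colour and normal similarity weights, which are controlled by the hypothetical parameters `sigmaColor` and `sigmaNormal`. Common variants also add a position or depth term, which is omitted here.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Normal = std::array<float, 3>;

// One pass of an edge-avoiding a-trous filter over a single-channel image
// (grayscale for brevity; a real light buffer would be RGB).
// Taps are spaced 2^iteration pixels apart, so the footprint doubles each pass.
std::vector<float> atrousPass(const std::vector<float>& color,
                              const std::vector<Normal>& normals,
                              int width, int height, int iteration,
                              float sigmaColor, float sigmaNormal) {
    assert(color.size() == static_cast<std::size_t>(width) * height);
    assert(normals.size() == color.size());
    // 1D B3-spline weights; the 5x5 kernel is their outer product.
    const float kernel[5] = {1.0f / 16, 1.0f / 4, 3.0f / 8, 1.0f / 4, 1.0f / 16};
    const int step = 1 << iteration;
    std::vector<float> out(color.size());

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int p = y * width + x;
            float sum = 0.0f;
            float weightSum = 0.0f;
            for (int j = -2; j <= 2; ++j) {
                for (int i = -2; i <= 2; ++i) {
                    // Clamp taps that fall outside the image to the border.
                    const int qx = std::clamp(x + i * step, 0, width - 1);
                    const int qy = std::clamp(y + j * step, 0, height - 1);
                    const int q = qy * width + qx;
                    // Edge-stopping terms: large colour or normal differences shrink the weight.
                    const float dc = color[q] - color[p];
                    float dn2 = 0.0f;
                    for (int k = 0; k < 3; ++k) {
                        const float d = normals[q][k] - normals[p][k];
                        dn2 += d * d;
                    }
                    const float w = kernel[i + 2] * kernel[j + 2]
                                  * std::exp(-(dc * dc) / (sigmaColor * sigmaColor))
                                  * std::exp(-dn2 / (sigmaNormal * sigmaNormal));
                    sum += w * color[q];
                    weightSum += w;
                }
            }
            // The centre tap always has weight 9/64, so weightSum is never zero.
            out[p] = sum / weightSum;
        }
    }
    return out;
}
```

Running the pass with `iteration = 0, 1, 2` and so on, feeding each output into the next pass, produces the growing footprint with "holes" that gives the filter its name.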

+

+#### ⚙️ Custom Modifications

+Unlike traditional implementations, we modified the filter to run **only on the light output texture**, not on the full pixel buffer.

+

+🖼️ This preserves detail in texture-mapped objects, while still removing lighting noise from the path tracer.
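
The README does not detail RT's buffers. One common way to get this behaviour, assumed here, is to keep the surface albedo (texture colour) in a separate buffer, denoise only the incoming light, and multiply the two back together at the end. `composite` and the `RGB` alias are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using RGB = std::array<float, 3>;

// Hypothetical final composite: texture detail never passes through the denoiser;
// it is multiplied back in after the light buffer has been filtered.
std::vector<RGB> composite(const std::vector<RGB>& albedo,
                           const std::vector<RGB>& denoisedLight) {
    assert(albedo.size() == denoisedLight.size());
    std::vector<RGB> out(albedo.size());
    for (std::size_t i = 0; i < albedo.size(); ++i) {
        for (int c = 0; c < 3; ++c) {
            out[i][c] = albedo[i][c] * denoisedLight[i][c];
        }
    }
    return out;
}
```

Because the blur only ever sees lighting, sharp texture edges in the albedo buffer survive untouched.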

+

+Before Denoising

+

+After Denoising

+

+Screenshot of the ImGui interface with client frame stats and camera spline

+

+Demo video of a rendered animation path
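
The README does not say which spline RT uses for the camera path. A uniform Catmull‑Rom spline is a common choice because it passes through every keyframe. The sketch below uses the hypothetical names `catmullRom` and `samplePath` to sample a camera position for a global path parameter, for example once per output frame before the frames are encoded with FFmpeg.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// Uniform Catmull-Rom interpolation between p1 and p2 (t in [0, 1]);
// p0 and p3 are the neighbouring keyframes that shape the tangents.
Vec3 catmullRom(Vec3 p0, Vec3 p1, Vec3 p2, Vec3 p3, float t) {
    const float t2 = t * t, t3 = t2 * t;
    auto blend = [&](float a, float b, float c, float d) {
        return 0.5f * (2.0f * b + (-a + c) * t
                       + (2.0f * a - 5.0f * b + 4.0f * c - d) * t2
                       + (-a + 3.0f * b - 3.0f * c + d) * t3);
    };
    return {blend(p0.x, p1.x, p2.x, p3.x), blend(p0.y, p1.y, p2.y, p3.y),
            blend(p0.z, p1.z, p2.z, p3.z)};
}

// Position along the whole path for a global parameter u in [0, keys.size() - 1].
// End keyframes are reused as their own neighbours so the curve reaches them.
Vec3 samplePath(const std::vector<Vec3>& keys, float u) {
    assert(keys.size() >= 2);
    const std::size_t last = keys.size() - 1;
    if (u <= 0.0f) return keys.front();
    if (u >= static_cast<float>(last)) return keys.back();
    const std::size_t i = static_cast<std::size_t>(u); // segment index
    const float t = u - static_cast<float>(i);          // parameter within the segment
    const Vec3& p0 = keys[i == 0 ? 0 : i - 1];
    const Vec3& p3 = keys[i + 1 == last ? last : i + 2];
    return catmullRom(p0, keys[i], keys[i + 1], p3, t);
}
```

Sampling at evenly spaced `u` values gives one position per video frame. Camera orientation needs its own interpolation (quaternion slerp, for example), which is left out here.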

+


+ ⭐ Star this repository if you liked it! ⭐
